Aug 17, 2023: I looked around a bit in the Transformers source code and found a function called `is_bitsandbytes_available()`, which only returns true if bitsandbytes is installed and `torch.cuda.is_available()` is true. That is not the case on an Apple Silicon machine.
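To see which of the two conditions fails on a given machine, a small check along these lines may help. This is a minimal sketch, not the Transformers implementation: the function name `bitsandbytes_gpu_status` and the dictionary keys are made up for illustration, and only `importlib.util.find_spec` and `torch.cuda.is_available()` are real calls.

```python
import importlib.util


def bitsandbytes_gpu_status() -> dict:
    """Report the two conditions described in the post, without raising."""
    status = {
        # find_spec only looks the package up; it does not import it.
        "bitsandbytes_installed": importlib.util.find_spec("bitsandbytes") is not None,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if status["torch_installed"]:
        try:
            import torch

            status["cuda_available"] = bool(torch.cuda.is_available())
        except Exception:
            # Assumption: a torch install that fails to import is treated as "no CUDA".
            pass
    # Hypothetical summary flag mirroring the logic described in the post.
    status["usable"] = status["bitsandbytes_installed"] and status["cuda_available"]
    return status


if __name__ == "__main__":
    print(bitsandbytes_gpu_status())
```

On an Apple Silicon machine one would expect `cuda_available` to be false even when both packages are installed, which matches the behaviour described in the post.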


Aug 10, 2022: ... and take note of the CUDA version that you have installed. Then you can install bitsandbytes via `pip install bitsandbytes-cudaXXX`, replacing XXX with the respective number (choices: cuda92, cuda100, cuda101, cuda102, cuda110, cuda111, cuda113). To check if your installation was successful, you can execute the following command, which runs a …
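The mapping from a CUDA version to one of the package names listed in that post can be sketched as follows. The helper name `legacy_package_for` is hypothetical, and the list of builds is taken only from the post above; it applies to these older per-CUDA packages, not to current bitsandbytes releases.

```python
# Suffixes exactly as listed in the Aug 10, 2022 post.
LEGACY_CUDA_BUILDS = ("92", "100", "101", "102", "110", "111", "113")


def legacy_package_for(cuda_version: str) -> str:
    """Map a CUDA version such as '11.3' to 'bitsandbytes-cuda113'."""
    parts = cuda_version.strip().split(".")
    if len(parts) < 2 or not all(p.isdigit() for p in parts[:2]):
        raise ValueError(f"expected a version like '11.3', got {cuda_version!r}")
    suffix = parts[0] + parts[1]  # major and minor only; any patch level is ignored
    if suffix not in LEGACY_CUDA_BUILDS:
        raise ValueError(f"no legacy build listed for CUDA {cuda_version}")
    return f"bitsandbytes-cuda{suffix}"


if __name__ == "__main__":
    print("pip install", legacy_package_for("11.3"))
```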

`warn("The installed version of bitsandbytes was compiled without GPU support.` I have downloaded the CPU version as I do not have an Nvidia GPU, although if it's possible to use an AMD GPU without Linux I would love that. Does anybody know how to fix this?

I just wanted to try a supposedly local ChatGPT for fun. I used the one-click install method found on the webUI’s GitHub page. That being said, I have been getting these two errors: “The installed version of bitsandbytes was compiled without GPU support.” and “AssertionError: Torch not compiled with CUDA enabled”.

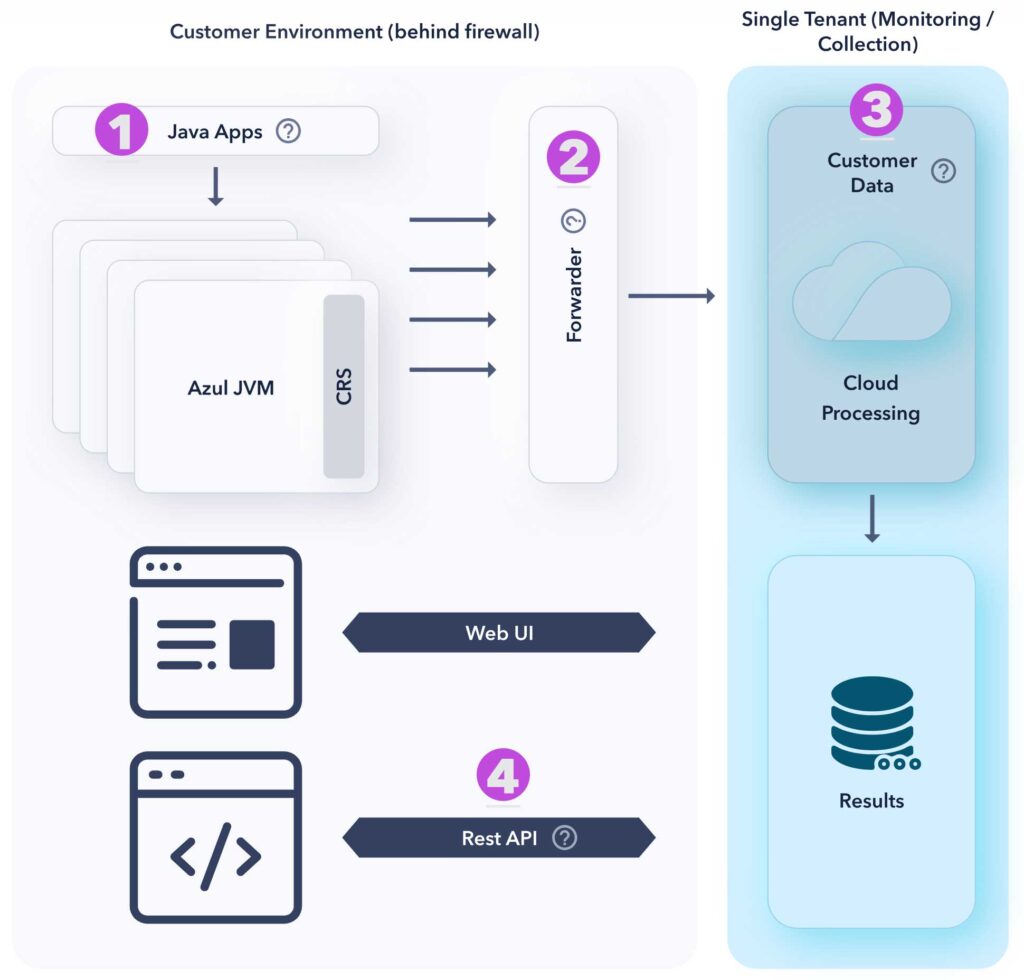

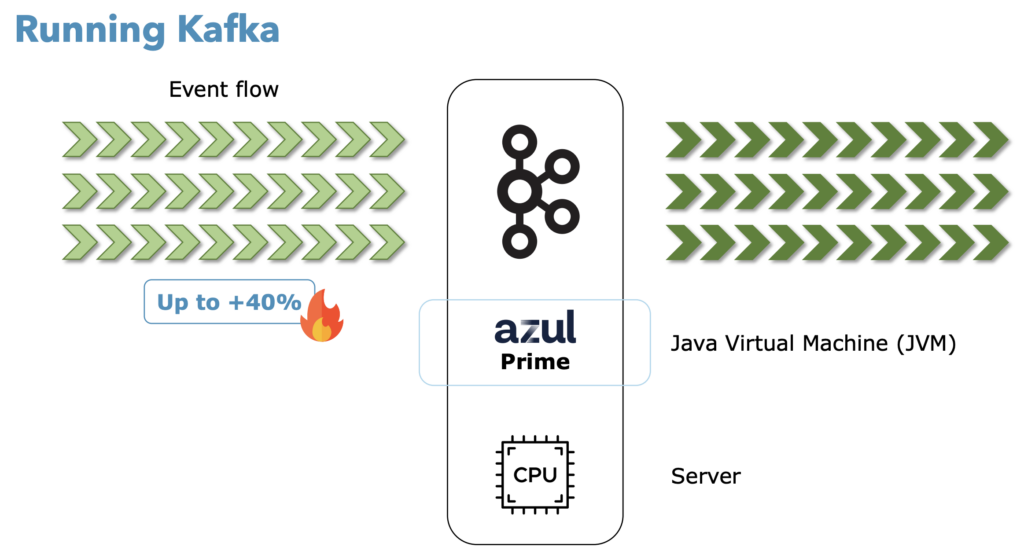

The Installed Version of Bitsandbytes Was Compiled Without GPU Support

Aug 25, 2022: GitHub issue #26 (closed, 6 comments), opened by vjeronymo2: “bitsandbytes was compiled without GPU support. 8-bit optimizers and GPU quantization are unavailable.” The issue lists two possible causes: the CUDA driver is not detected (libcuda.so), or the runtime library is not detected (libcudart.so).
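A quick way to see whether the system loader can locate those two libraries is sketched below. It uses the standard-library `ctypes.util.find_library`; the function name `find_cuda_libraries` is made up for illustration. Note the assumption: this only searches the loader's usual paths, so a CUDA install in a non-standard directory may be reported as missing even though it exists.

```python
import ctypes.util


def find_cuda_libraries() -> dict:
    """Return what the system loader finds for libcuda and libcudart (or None)."""
    wanted = {
        "libcuda": "cuda",      # CUDA driver library
        "libcudart": "cudart",  # CUDA runtime library
    }
    return {label: ctypes.util.find_library(short) for label, short in wanted.items()}


if __name__ == "__main__":
    for label, found in find_cuda_libraries().items():
        print(f"{label}: {found if found else 'not found on the default search path'}")
```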


Jan 7, 2024: Installation: `pip install bitsandbytes`. In some cases you may need to compile from source. If this happens, please consider submitting a bug report that includes the output of `python -m bitsandbytes`. What follows are some short instructions that might work out of the box if nvcc is installed. If these do not work, see further below.

Issue: When I run the following line of code: `pipe = pipeline(model=name, model_kwargs={"device_map": "auto", "load_in_8bit": True}, max_new_tokens=max_new_tokens)` I …
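One way to keep a call like this from failing on machines without CUDA is to request 8-bit loading only when a CUDA device is present. The helper below is a hypothetical sketch, not part of Transformers; it only builds the `model_kwargs` dictionary shown in the issue and leaves the decision about CUDA availability to the caller.

```python
def build_model_kwargs(cuda_available: bool) -> dict:
    """Request 8-bit loading only when CUDA is available (hypothetical helper)."""
    if cuda_available:
        # Same keys as in the issue above.
        return {"device_map": "auto", "load_in_8bit": True}
    # Assumption: without CUDA, fall back to the library defaults.
    return {}


if __name__ == "__main__":
    print(build_model_kwargs(True))
    print(build_model_kwargs(False))
```

In the call from the issue, the result would be passed as `model_kwargs=build_model_kwargs(torch.cuda.is_available())`.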

can not get UI running – “The installed version of bitsandbytes was compiled without GPU support” · Issue #1931 · oobabooga/text-generation-webui · GitHub

Kohya_SS errors “libcudart.so not found” “CUDA setup failed” “CalledProcessError” – Ask Ubuntu
